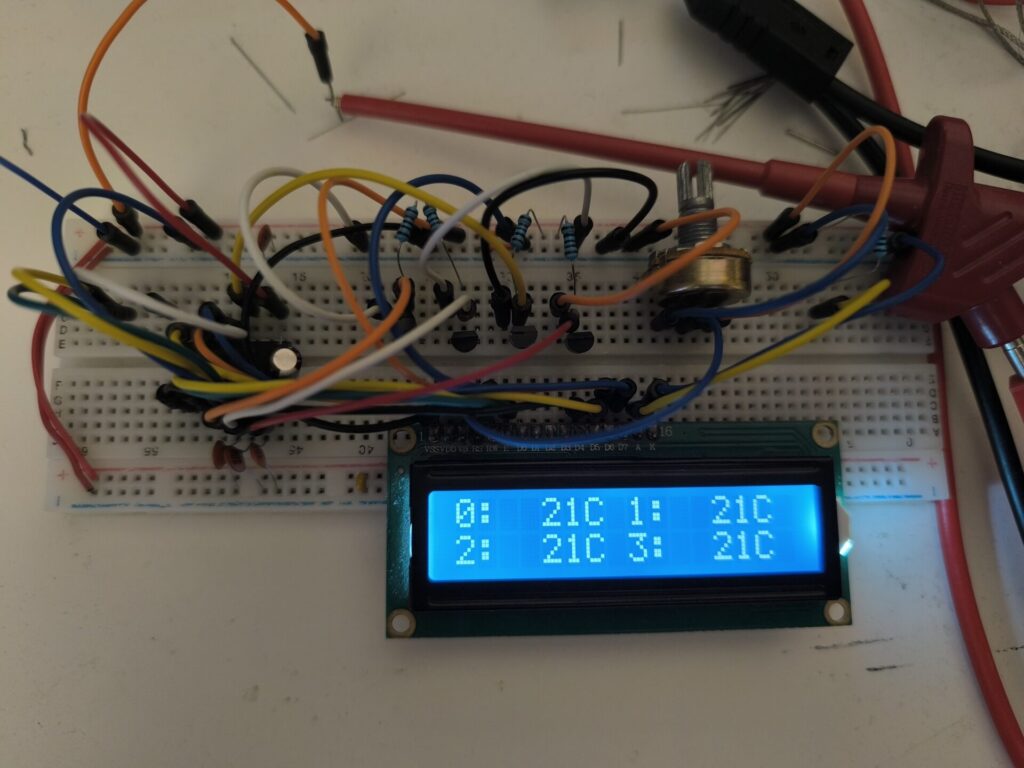

With the release of the new Claude 3.7 Sonnet, a (mostly) coding-focused model, I decided to try it out on an embedded project. I wasn’t in the mood to study, so I wanted to build a circuit and get my hands dirty. I’ve always wanted to build a multi-channel room-temperature thermometer, and it seemed like an easy enough challenge for Claude. Normally, I’d say that a project like that would take me 1-2 hours from start to finish with the chosen parts:

- Microchip PIC16F1705 microcontroller

- Generic 16×2 character display

- LM235Z temperature sensor

I can confidently give this estimate because I’ve already built almost exactly the same project in the past, which is also why I found this challenge interesting. What would Claude do differently from me? If you want to skip to the conclusions, these are the repo links:

snikolaj/pic-thermometer (original)

snikolaj/pic16f1705quadthermometer (new)

The work itself

Initially, I did the register setup in MPLAB X myself. I knew what I wanted to accomplish, so I gave custom names to all of the I/O pins (so that the AI would know the chip’s physical interface to the world), set up the ADC (with one intentional register error!), set up the internal voltage reference, and configured the initialization registers. What the AI had to do was:

- Set up the LCD with a 4-bit interface

- Read and scale the ADC inputs

- Format and output the ADC readings to the LCD

I gave it this list, described the parts in more detail, and told it what to do. Shockingly, it did the whole thing in a single prompt. I’d heard of Claude 3.7 Sonnet’s tendency to one-shot problems, but this was just incredible to see. I uploaded and ran the code, and it worked almost perfectly. The intentional error I introduced, which also tripped me up when I first wrote my own code for the PIC16F1705, was the alignment of the ADC result. The 10-bit result is stored in a 16-bit register pair and can be either left-aligned (the 10 bits fill the top of the pair and the 6 lowest bits read as 0) or right-aligned (the reverse: the 10 bits fill the bottom and the 6 highest bits are 0). Left alignment is useful because in many ADCs the lowest 1-2 bits are mostly noise, so reading only the upper 8 bits (and accounting for this) gets you the most realistic result.
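To make the alignment issue concrete, here is a minimal C sketch (not the project’s actual code) of rebuilding the 10-bit value from the two result bytes under either alignment. On the PIC16F1705, if I’m reading the datasheet correctly, the bytes are ADRESH/ADRESL and the alignment is selected by the ADFM bit in ADCON1; the function name and the plain-byte interface are assumptions so the logic can be checked on a PC.

```c
#include <stdbool.h>
#include <stdint.h>

/* Rebuild the 10-bit ADC result from the high and low result bytes.
 * Left-aligned:  bits 15..6 of the pair hold the result, bits 5..0 are 0.
 * Right-aligned: bits 9..0 of the pair hold the result, bits 15..10 are 0. */
static uint16_t adc_result_10bit(uint8_t hi, uint8_t lo, bool left_aligned)
{
    uint16_t pair = (uint16_t)(((uint16_t)hi << 8) | lo); /* the 16-bit register pair */

    if (left_aligned) {
        return (uint16_t)(pair >> 6);   /* drop the 6 zero-padding bits */
    }
    return (uint16_t)(pair & 0x03FFu);  /* keep only the low 10 bits */
}
```

Treating a left-aligned pair as right-aligned, for example by combining it as `(hi << 8) | lo` without the shift, yields the true value multiplied by 64. With left alignment, `hi` on its own is the top 8 bits of the result, which is the “read one register and ignore the noisy LSBs” shortcut.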

In my case, each ADC count corresponded to 4.00 mV (a 4.096 V internal voltage reference divided across the 1024 codes of a 10-bit ADC), while the temperature sensor outputs 10 mV per kelvin. That makes each count 0.4 K, so losing the bottom 2 bits would quantize my reading to 1.6 K steps, which is annoying. Since the sensor’s errors are least pronounced at around 25 °C anyway, I knew that right-aligning the result and keeping all 10 bits would be the way to go. The auto-generated documentation also clearly stated that the result was left-aligned. Yet… Claude failed to notice this and treated the left-aligned value (effectively shifted left by 6 bits, i.e. multiplied by 64) as right-aligned, which predictably gave an astronomically high initial reading. I noticed this and fixed it.
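The scaling can be done with integer-only C: 4.096 V / 1024 = 4 mV per count, and 4 mV ÷ 10 mV/K = 0.4 K per count, so dropping 2 bits (4 counts) gives 1.6 K steps. This sketch assumes a right-aligned 10-bit reading, an ideal 4.096 V reference and an ideal 10 mV/K sensor with no calibration offset; `adc_to_centicelsius` is a hypothetical name, not something from the repo.

```c
#include <stdint.h>

/* Assumptions: ideal 4.096 V reference, 10-bit ADC, ideal 10 mV/K sensor. */
#define VREF_MV          4096L   /* reference voltage in millivolts */
#define ADC_CODES        1024L   /* 2^10 codes for a 10-bit ADC */
#define SENSOR_UV_PER_K  10000L  /* 10 mV/K expressed in microvolts */

/* Convert a right-aligned 10-bit reading to hundredths of a degree Celsius.
 * Long constants keep the math 32-bit even where int is 16-bit (e.g. a PIC). */
static int32_t adc_to_centicelsius(uint16_t counts)
{
    /* 4096 mV / 1024 codes = 4 mV = 4000 uV per count */
    int32_t microvolts = (int32_t)counts * (VREF_MV * 1000L / ADC_CODES);

    /* 1 K = 10000 uV, so 1 centikelvin = 100 uV */
    int32_t centikelvin = microvolts / (SENSOR_UV_PER_K / 100L);

    return centikelvin - 27315L;  /* 0 °C = 273.15 K */
}
```

For example, a reading of 745 counts is 2.98 V, i.e. 298.00 K or 24.85 °C, and neighbouring counts differ by 40 hundredths of a degree (0.4 K).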

Another bug it failed to notice, this time unintentional, was that the ADC acquisition time was set too short, so the ADC readings were unstable. I, naturally, used the ancient principles of engineering and debugging to find that out. Still, when I ran a similar experiment a few months ago, no AI could write anything remotely good in the embedded world. Now, it seems to be getting the firmware down very well.
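A minimal sketch of where an acquisition delay belongs when cycling through sensor channels, assuming it is handled as a software delay between switching the ADC input multiplexer and starting the conversion. The hook structure, the `ADC_ACQ_US` value and the mock are all hypothetical, chosen so the call order can be tested on a PC; the real minimum delay should come from the datasheet’s acquisition-time equation.

```c
#include <stdint.h>

/* Hypothetical hardware hooks. On the PIC these would wrap the channel
 * select bits, a busy-wait delay, and start/wait/read of one conversion. */
typedef struct {
    void     (*select_channel)(uint8_t ch);
    void     (*delay_us)(uint16_t us);
    uint16_t (*convert)(void);
} adc_hal_t;

/* Assumed value for illustration only; derive the real one from the datasheet. */
#define ADC_ACQ_US 10u

/* After the multiplexer switches, the sample-and-hold capacitor needs time
 * to charge to the new input voltage before the conversion may start. */
static uint16_t adc_read_channel(const adc_hal_t *hal, uint8_t ch)
{
    hal->select_channel(ch);
    hal->delay_us(ADC_ACQ_US);
    return hal->convert();
}

/* Host-side mock that records the call order, so a PC test can check that
 * the delay really sits between channel selection and conversion. */
static char     mock_log[8];
static uint8_t  mock_len;
static uint8_t  mock_channel;
static uint16_t mock_delay_total;

static void mock_select(uint8_t ch) { mock_channel = ch; mock_log[mock_len++] = 'S'; }
static void mock_delay(uint16_t us) { mock_delay_total += us; mock_log[mock_len++] = 'D'; }
static uint16_t mock_convert(void)  { mock_log[mock_len++] = 'C'; return 745u; }

static const adc_hal_t mock_hal = { mock_select, mock_delay, mock_convert };
```

Keeping the hardware behind hooks like this is one way to make firmware logic testable off-target.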

Additionally, the overly ambitious Claude wrote a nice README outlining how the project works, unprompted. The README was actually quite good, and it’s exactly the kind of tedious work I’d always done manually in the past.

Overall, I was very pleasantly surprised by Claude, but I recognize that it still has a long way to go. In this case, the main issue came down to how well it can read and understand documentation. Much of EE design work is tedious documentation slogging, so if AI can improve that, as well as write a 16×2 LCD library for the billionth time, I’ll be extremely happy. For me, the main fun in building circuits is doing the electrical design, while the firmware is something I just have to do. Even though Claude isn’t perfect at this yet, it’s far better than anything I tried a few months ago, and it can only get better from here.
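For a sense of how small (and how repetitive) the core of a 16×2 LCD library is, here is a sketch of two of its pieces, assuming an HD44780-compatible controller as used on most generic 16×2 modules: splitting each byte into two nibbles for the 4-bit interface, and building the “set DDRAM address” command that positions the cursor. The function names are hypothetical, and driving RS, E and D4-D7 (plus the required timing) is left out.

```c
#include <stdint.h>

/* In 4-bit mode each byte travels over D4..D7 as two nibbles, high nibble
 * first. The (hypothetical) caller sets RS, puts each nibble on the pins
 * and pulses E once per nibble. */
static void lcd_split_byte(uint8_t value, uint8_t nibbles[2])
{
    nibbles[0] = (uint8_t)(value >> 4);     /* sent first */
    nibbles[1] = (uint8_t)(value & 0x0Fu);  /* sent second */
}

/* "Set DDRAM address" command (bit 7 set) for a 16x2 display:
 * line 1 starts at address 0x00, line 2 at 0x40. */
static uint8_t lcd_cursor_cmd(uint8_t row, uint8_t col)
{
    uint8_t base = (row == 0u) ? 0x00u : 0x40u;
    return (uint8_t)(0x80u | (base + (col & 0x0Fu)));  /* 16 columns: 0..15 */
}
```

For example, `lcd_cursor_cmd(1, 0)` gives `0xC0`, the command for the start of the second line.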

A discussion on AI

Now, there is also an interesting discussion to be had about code quality. One thing I noticed with Claude 3.7 (as opposed to 3.5) was its tendency to go for extremely overengineered solutions. Here, it used a bunch of fancy C techniques and floating-point math where they just weren’t necessary. I didn’t mind the floats, since I paid for all of the Flash and RAM, so I’m going to use it, and the project hit its feature ceiling anyway. Still, I’d be very careful using Claude on resource-constrained embedded projects. Another quirk of Claude 3.7 is that the quality of its responses drops drastically after a one-shot code tsunami. Starting a fresh conversation usually helps a lot. I assume that one-shotting fills up its context very quickly, and then it starts hallucinating. Even so, it got ~95% of the code done on its first shot, and I could easily handle the rest.
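As an example of skipping floating point on a small PIC, here is a sketch that formats a temperature given in hundredths of a degree Celsius into a string like `24.9C`, using only integer division and no `printf`. The input unit, the function name and the output format are assumptions for illustration, not what Claude or I actually wrote.

```c
#include <stdint.h>

/* Format hundredths of a degree Celsius as e.g. "24.9C" or "-5.3C",
 * rounded half away from zero to one decimal place, using integer math only.
 * buf needs room for at least 13 chars. Returns the string length. */
static uint8_t format_temp(int32_t centi, char *buf)
{
    /* C division truncates toward zero, so bias by +/-5 before dividing. */
    int32_t tenths = (centi >= 0) ? (centi + 5) / 10 : (centi - 5) / 10;
    uint8_t len = 0;

    if (tenths < 0) {
        buf[len++] = '-';
        tenths = -tenths;
    }

    /* Emit the integer part: collect digits in reverse, then copy them out. */
    int32_t whole = tenths / 10;
    char digits[10];
    uint8_t n = 0;
    do {
        digits[n++] = (char)('0' + whole % 10);
        whole /= 10;
    } while (whole > 0);
    while (n > 0) {
        buf[len++] = digits[--n];
    }

    buf[len++] = '.';
    buf[len++] = (char)('0' + tenths % 10);  /* single decimal digit */
    buf[len++] = 'C';
    buf[len] = '\0';
    return len;
}
```

`format_temp(2485, buf)` writes `24.9C`. Small negative values such as -0.04 °C round to zero and print as `0.0C` rather than `-0.0C`, because the sign is decided after rounding.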

That brings me to the conclusion of this surprisingly short adventure. I see a lot of worry about AI causing issues in embedded or low-level environments. After thinking about it over these past few months, my answer is to treat AI like a junior employee, following these principles:

- Try to cover as much of the AI-generated code as you can with tests that you write yourself. If the code passes the tests and looks normal, then it’s probably fine.

- Try to give it tasks that are limited in scope, and keep all of the context for a given task about that task only.

- Give it as much detail about your project and goals as possible. It has some creativity and a lot of prior knowledge, and it can suggest ideas that disrupt your long-accumulated patterns.

- Tell it what you want it to do and what you don’t want it to do. If you tell it not to write code but to give you suggestions instead, it’ll do that.

- Look over all of the code yourself, even if only at a glance. Never use code that you don’t understand. I may not know the syntax of many functions, but I always make sure that I know exactly what the AI is doing. This is also essential so that your project doesn’t go wild when the AI starts hallucinating on top of its own hallucinations.

- Always keep backups and version history. You will get chains of hallucinations that can ruin your day if you forget.

- Know that sometimes – many times – it’s just faster and easier to do it yourself. AI is great for something like a 16×2 LCD library – I’ve written those at least 3 separate times, one of which was in Z80 assembly. I don’t want to do it again, and it’s tedious and well-documented work already. But getting frustrated, deleting everything, and redoing it manually is much more annoying than just doing it yourself from the start. I personally like the idea of letting Claude 3.7 one-shot a solution and then finishing the rest myself.

- You don’t have to automate everything. If you enjoy something and you don’t need perfect efficiency, don’t automate it. Different people enjoy different things in this sphere – I just don’t like firmware much myself. That doesn’t mean you have to feel the same way. You don’t have to do any of this. I still occasionally write something in Z80 or 8051 assembly, even though both the processors and their languages have been obsolete for decades. Life’s too short to replace the things you like doing with AI.

Another question I’ve seen is where the line is between you developing a device and AI developing it. What does it mean for you to be the one who developed something? If the AI wrote most of the code, does that mean that you’re not the developer? I find this discussion interesting, but ultimately, it has no real practical impact. If you’re working, our system only cares whether you can deliver a thing and get paid for it. Almost nobody cares whether you wrote your thing or someone else did, as long as you can understand it and use it. I personally have no issues with using AI as long as it’s writing code that I could’ve written (and usually have written) before. However, I understand that people may feel uneasy having most of their code written by not-them. Yet, given how much code has been written by “StackOverflow developers” while the world keeps spinning, I think that those concerns will be crushed by the onslaught of AI. This all ties into a bigger conversation about our efficiency-obsessed world and how much it alienates us from the fruits of our labor, but everyone keeps voting for the parties that perpetuate the system with a different color scheme, so if you’re not fighting against all of them, I personally don’t think you have a say in this conversation.